
Robots.txt Configuration in Magento 2: Nofollow and Noindex

Magento 2 Robots.txt

The robots exclusion standard, implemented through the robots.txt file, is how your website or store tells search engine crawlers what they may visit. The file specifies which pages of your site should be excluded from crawling or, vice versa, left open for crawling. That's why the robots.txt file matters for correct indexing of your website and its overall search visibility.

Magento 2 store owners use the **robots.txt** file to:

- Exclude potential duplicate content: with the `Disallow:` directive, Magento 2 sites can keep crawlers away from redundant pages and avoid the ranking problems that duplicate content causes.

- Keep admin and confidential directories out of search: stop bots from crawling private Magento 2 directories. Note that robots.txt is publicly readable and is not an access control, so it should not be the only protection for sensitive paths.

- Prevent crawling of sections under development or testing.

- Reduce server strain: keep bots away from resource-heavy pages to lower server load.

Tips for the Magento 2 robots.txt file

- Always place the robots.txt file in the root directory of your domain.

- Use Magento 2's built-in settings to manage the robots.txt file.

- Review the robots.txt file regularly and update it as your Magento 2 store changes.

How to configure the robots.txt file in Magento 2

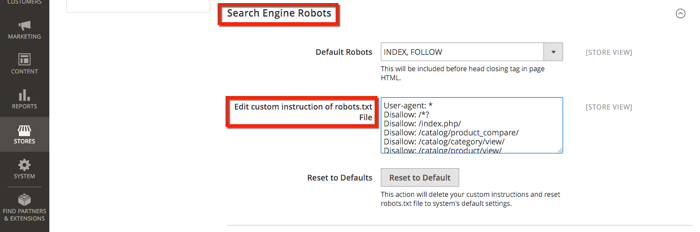

Magento 2 can generate and configure the robots.txt file out of the box. You can keep the default indexing settings or specify custom instructions for different search engines.

To configure the robots.txt file, follow these steps:

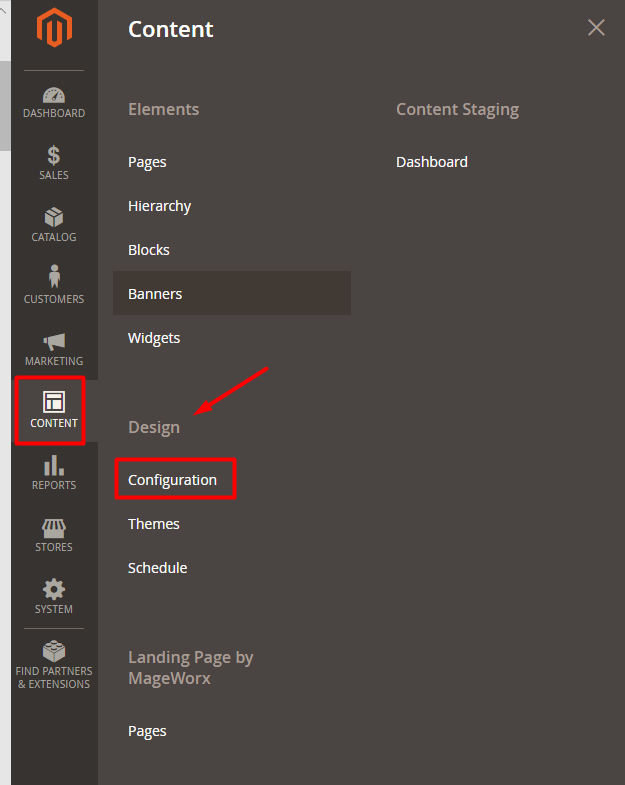

1. In the Admin panel, go to Content > Design > Configuration.

Examples of robots.txt files

We recommend the following custom robots.txt for your Magento 2 store:

```
User-agent: *
# Directories
Disallow: /404/
Disallow: /app/
Disallow: /cgi-bin/
Disallow: /downloader/
Disallow: /errors/
Disallow: /includes/
Disallow: /lib/
Disallow: /magento/
Disallow: /media/captcha/
# Paths (clean URLs)
Disallow: /index.php/
Disallow: /catalog/product_compare/
Disallow: /catalog/category/view/
Disallow: /catalog/product/view/
Disallow: /catalog/product/gallery/
Disallow: /catalogsearch/
Disallow: /checkout/
Disallow: /control/
Disallow: /customer/
Disallow: /customize/
Disallow: /sendfriend/
Disallow: /ajaxcart/
Disallow: /ajax/
Disallow: /quickview/
Disallow: /productalert/
Disallow: /sales/guest/form/
# Files
Disallow: /cron.php
Disallow: /cron.sh
Disallow: /error_log
Disallow: /install.php
Disallow: /LICENSE.html
Disallow: /LICENSE.txt
Disallow: /LICENSE_AFL.txt
Disallow: /STATUS.txt
Disallow: /get.php
# Paths (no clean URLs)
Disallow: /*.php$
Disallow: /*?SID=
Disallow: /*PHPSESSID

Sitemap: https://www.example.com/sitemap.xml
```
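To sanity-check a file like this before deploying it, you can evaluate sample URLs against its rules locally. The sketch below is a simplified matcher that assumes the wildcard semantics documented by Google and RFC 9309: `*` matches any run of characters, a trailing `$` anchors the end of the URL, the longest matching rule wins, and `Allow` wins a tie. It handles a single `User-agent: *` group only, and the helper names `parse_rules`, `pattern_to_regex`, and `is_allowed` are hypothetical. Python's standard `urllib.robotparser` does plain prefix matching, so it would not interpret `/*.php$` this way.

```python
import re

# Excerpt of the recommended file; add the remaining rules as needed.
ROBOTS_EXCERPT = """\
User-agent: *
# Paths (clean URLs)
Disallow: /index.php/
Disallow: /checkout/
Disallow: /customer/
# Paths (no clean URLs)
Disallow: /*.php$
Disallow: /*?SID=
Sitemap: https://www.example.com/sitemap.xml
"""

def parse_rules(robots_txt):
    """Return (is_allow, pattern) pairs, assuming a single `User-agent: *` group."""
    rules = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = line.split(":", 1)
        field, value = field.strip().lower(), value.strip()
        # Only path rules matter here; User-agent and Sitemap lines are skipped.
        # An empty Disallow value means "allow everything", so it adds no rule.
        if field in ("allow", "disallow") and value:
            rules.append((field == "allow", value))
    return rules

def pattern_to_regex(pattern):
    """`*` matches any character sequence; a trailing `$` anchors the end of the URL."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + (r"\Z" if anchored else ""))

def is_allowed(rules, path):
    """Longest matching pattern wins, Allow wins a tie, no match means allowed."""
    best_length, allowed = -1, True
    for is_allow, pattern in rules:
        if pattern_to_regex(pattern).match(path):  # match() anchors at the start
            if len(pattern) > best_length or (len(pattern) == best_length and is_allow):
                best_length, allowed = len(pattern), is_allow
    return allowed

rules = parse_rules(ROBOTS_EXCERPT)
for path in ["/checkout/cart/", "/cron.php", "/page.php?id=1",
             "/women/tops.html?SID=abc", "/women/tops.html"]:
    print(path, "allowed" if is_allowed(rules, path) else "blocked")
# /checkout/cart/ blocked
# /cron.php blocked
# /page.php?id=1 allowed
# /women/tops.html?SID=abc blocked
# /women/tops.html allowed
```

Note how `/*.php$` blocks `/cron.php` but not `/page.php?id=1`: the `$` requires the URL, including any query string, to end in `.php`.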

Let's consider each group of directives separately.

1. Stop search engine robots from crawling user account and checkout pages:

```
Disallow: /checkout/
Disallow: /onestepcheckout/
Disallow: /customer/
Disallow: /customer/account/
Disallow: /customer/account/login/
```
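Because robots.txt rules match by path prefix, `Disallow: /customer/` on its own already covers `/customer/account/` and `/customer/account/login/`; the two longer rules are redundant but harmless. You can confirm this with Python's standard `urllib.robotparser`, which handles plain prefix rules like these (it does not implement the `*` and `$` wildcards). The store URLs below are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Only the broad rules; the /customer/account/... lines are deliberately left out.
ACCOUNT_RULES = """\
User-agent: *
Disallow: /checkout/
Disallow: /onestepcheckout/
Disallow: /customer/
"""

parser = RobotFileParser()
parser.parse(ACCOUNT_RULES.splitlines())  # parse() also marks the rules as loaded

for url in [
    "https://www.example.com/customer/account/login/",
    "https://www.example.com/checkout/cart/",
    "https://www.example.com/women/tops.html",
]:
    # can_fetch() returns False when a Disallow prefix matches the URL path.
    print(url, parser.can_fetch("*", url))
```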

2. Block native catalog and search pages:

```
Disallow: /catalogsearch/
Disallow: /catalog/product_compare/
Disallow: /catalog/category/view/
Disallow: /catalog/product/view/
```

3. Some webmasters also block pages with filter and sorting parameters:

```
Disallow: /*?dir*
Disallow: /*?dir=desc
Disallow: /*?dir=asc
Disallow: /*?limit=all
Disallow: /*?mode*
```
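These filter rules rely on the `*` wildcard, so the position of `?dir` in the URL matters. Under the wildcard semantics that Google documents and RFC 9309 specifies, `/*?dir*` matches only when `dir` is the first query parameter, and it already covers the `?dir=desc` and `?dir=asc` variants. The minimal sketch below translates such a pattern into a regular expression so you can check this. The helper name `robots_match` is hypothetical, and parameter names differ between themes and Magento versions, so test against the URLs your store actually generates.

```python
import re

def robots_match(pattern, path):
    """Return True if a robots.txt path pattern matches the start of `path`.

    `*` matches any character sequence; a trailing `$` anchors the end of the URL.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.match(regex + (r"\Z" if anchored else ""), path) is not None

print(robots_match("/*?dir*", "/women/tops.html?dir=desc"))        # True
print(robots_match("/*?dir*", "/women/tops.html?p=2&dir=desc"))    # False: dir is not first
print(robots_match("/*?limit=all", "/women/tops.html?limit=all"))  # True
```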

4. Block CMS system directories:

```
Disallow: /app/
Disallow: /bin/
Disallow: /dev/
Disallow: /lib/
Disallow: /phpserver/
Disallow: /pub/
```

5. Block duplicate content:

```
Disallow: /tag/
Disallow: /review/
```

6. To hide specific pages, add rules like the following to the robots.txt file:

```
User-agent: *
Disallow: /myfile.html
```

7. Don't forget to specify your domain and point crawlers to your sitemap:

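```
Host: (www.)domain.com
Sitemap: http://www.domain.com/sitemap_en.xml
```

Note that `Host` was a Yandex-specific directive that Yandex no longer supports, and Google ignores it; the `Sitemap` line is the one search engines rely on.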
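Crawlers read `Sitemap:` lines regardless of which `User-agent` group they appear in. As a quick check that the directive is picked up, Python's `urllib.robotparser` (Python 3.8 and later) exposes the parsed sitemap URLs through `site_maps()` and, like most parsers, skips fields it does not recognize, such as `Host`. The domain below is the placeholder from the example above, written without the optional `www.` notation.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Host: www.domain.com
Sitemap: http://www.domain.com/sitemap_en.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns the list of Sitemap URLs, or None when the file declares none.
print(parser.site_maps())  # ['http://www.domain.com/sitemap_en.xml']
```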

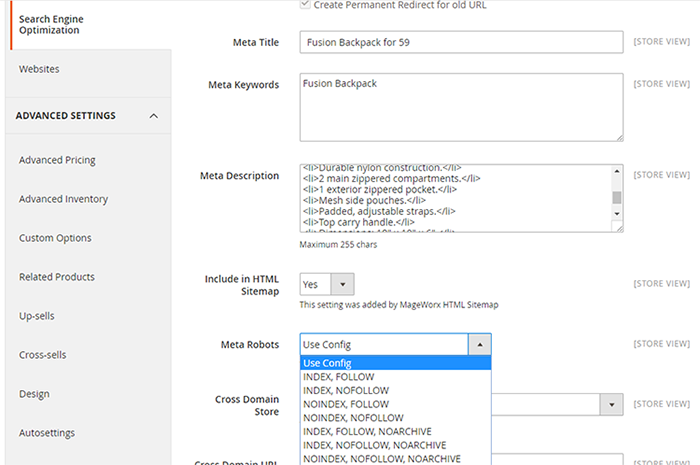

Meta robots tags: NOINDEX, NOFOLLOW

After configuring the robots.txt file, you can turn your attention to the NOINDEX and NOFOLLOW meta robots tags.

Meta robots tags give Magento 2 stores finer control over page indexing. Placed within a page's `<head>` section, these tags tell search engines exactly how to treat that individual page, unlike the site-wide directives of the robots.txt file.

Types of Magento 2 Meta Robots tags

- noindex: tells search engines not to index the page; use it for Magento 2 pages you don't want in search results.

- nofollow: tells search engines not to follow the links on the page.

- noarchive: prevents search engines from showing a cached copy of the page.

- nosnippet: ensures no part of the page appears as a snippet in search results.

How to configure the meta robots tags in Magento 2

To apply NOINDEX or NOFOLLOW to a page, add a `<meta name="robots">` tag to its `<head>` section. Don't rely on robots.txt for this: Google stopped supporting `noindex` rules in robots.txt in 2019.

Some possible combinations:

```html
<meta name="robots" content="index, follow">
<meta name="robots" content="noindex, follow">
<meta name="robots" content="index, nofollow">
<meta name="robots" content="noindex, nofollow">
```
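When auditing pages, it helps to read back which combination a page actually serves. Below is a minimal sketch using Python's standard `html.parser` module that collects the directives from a page's `<meta name="robots">` tag. The class and function names `MetaRobotsParser` and `meta_robots` are hypothetical, and the sample HTML stands in for a real page.

```python
from html.parser import HTMLParser

class MetaRobotsParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # attrs is a list of (name, value) pairs; value is None for bare attributes.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            # Directives are comma-separated and case-insensitive.
            for directive in attributes.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())

def meta_robots(html):
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    return parser.directives

page = '<html><head><meta name="robots" content="NOINDEX, follow"></head><body></body></html>'
print(sorted(meta_robots(page)))  # ['follow', 'noindex']
```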

The noindex and nofollow tags have several advantages over blocking a page through robots.txt:

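- robots.txt stops search engine bots from crawling a page, but it does not keep the page out of the index: if other websites link to it, the URL can still be discovered and shown in search results. A `noindex` tag, by contrast, tells search engines not to index the page.

- With `noindex, follow`, a page that has inbound links can still pass link equity ("link juice") to other pages of the site through its internal links. A page blocked in robots.txt is not crawled, so its links are not followed.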
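One practical consequence of how crawling works: a `noindex` tag only takes effect if crawlers are allowed to fetch the page, because a URL blocked in robots.txt is never downloaded and its meta tags are never seen. The minimal sketch below flags that conflict. It assumes you already have the robots.txt rules and each page's meta robots directives (both hard-coded here for illustration), and the function name `find_conflicts` is hypothetical.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /catalogsearch/
"""

# Hypothetical audit data: URL -> directives found in the page's meta robots tag.
PAGES = {
    "https://www.example.com/catalogsearch/result/?q=shirt": {"noindex", "follow"},
    "https://www.example.com/customer/account/": {"noindex", "nofollow"},
    "https://www.example.com/women/tops.html": {"index", "follow"},
}

def find_conflicts(robots_txt, pages):
    """Return URLs that carry noindex but are blocked in robots.txt (tag never seen)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url, directives in pages.items()
        if "noindex" in directives and not parser.can_fetch("*", url)
    ]

print(find_conflicts(ROBOTS_TXT, PAGES))
# ['https://www.example.com/catalogsearch/result/?q=shirt']
```

To fix a flagged URL, remove its `Disallow` rule so that crawlers can reach the page and read the `noindex` tag.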

Mageworx SEO Suite extension

With the instructions above, you can manually configure the robots.txt file of your Magento 2 store, hide unnecessary sections of the site from crawlers, and control how page weight is distributed. However, it's important to review these settings regularly and adjust the meta robots configuration as your store changes. The Mageworx Magento 2 SEO extension provides advanced tools to do this for you:

- Set up meta robots for any product page or for products in bulk

- Set up meta robots for any category page

- Configure the meta robots for any CMS page

- Configure the meta robots tag for any custom page in your Magento 2 store

- Advanced meta robots tag setup for layered navigation pages (for any attribute combinations, for category filter pages with multiple selections)

- and much more